Subjects in self-contact

- TRAIN set: 4 subjects

- TEST set: 2 subjects

In each recording, the subject is motion tracked with a marker-based motion capture system (Vicon).

|

|

Multiple cameras

- 4 different views

- 900x900 resolution

- 50 fps

- Camera parameters:

- extrinsics

- intrinsics for 2 different camera models (one assuming image distortion, one ignoring it)

- The TEST set consists of only one random frontal camera viewpoint per sequence, to avoid simplifying the 3D Reconstruction challenge through multi-view triangulation/optimization.

|

|

Various self-contact actions in various scenarios

Each subject performs the following actions.

- standing (116 scenarios)

- sitting on the floor (20 scenarios)

- interacting with a chair (36 scenarios)

|

|

GHUM and SMPLX meshes

- Ground-truth, well-alligned mesh - obtained by fitting the GHUM model to accurate 3d markers, multi-view image evidence and body scans

- We retarget the GHUM meshes to the SMPLX topology and provide pose and shape parameters for both

- 50 fps

|

|

3D skeletons

- Ground-truth 3d skeletons with 25 joints (including the 17 Human3.6m joints)

- 50 fps

|

|

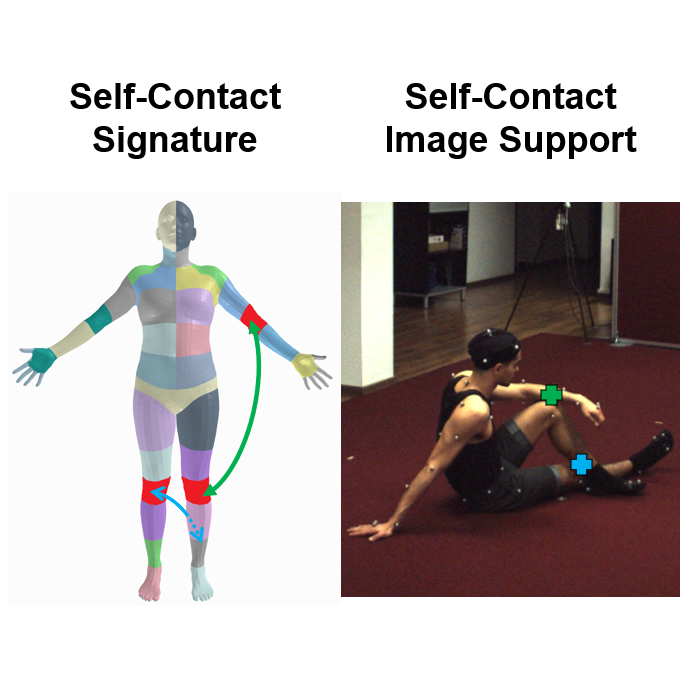

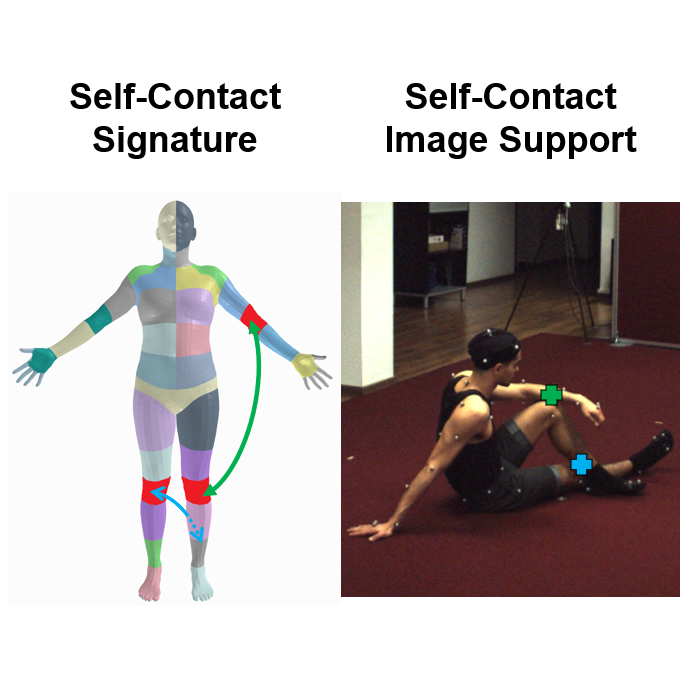

Self-contact annotations

Each of the 1032 recordings contain:

- 1 video timestamp where self-contact is established

- the self-contact signature annotation (multiple correspondences on the body surface between two triangle ids / vertex ids / region ids)

- the self-contact image support annotation (the projection of each self-contact vertex correspondence in the image)

Due to the 4 viewpoints, this amounts to 4128 triplets of images, self-contact signatures and self-contact image support.

|

|